A technician reviews next-generation AI accelerator hardware that reflects the rising influence of TPU technology.

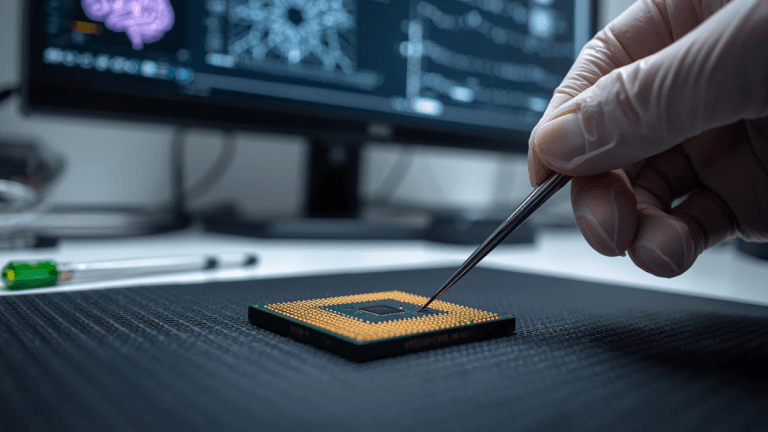

Tensor Processing Units, or TPUs, are no longer a secret weapon. For years, these custom-designed silicon chips were the engine powering Google’s most advanced artificial intelligence services, quietly optimizing everything from Search results to YouTube recommendations.

Now, that proprietary fortress is opening up, marking a decisive escalation in the global AI chip wars and a direct threat to the current undisputed sovereign of the space, Nvidia.

This is more than just a new product launch; it’s a strategic maneuver that cuts to the heart of hyperscaler economics and the future of AI infrastructure. Why does this development matter now, and how can a custom chip architecture truly challenge a company that defines the state of the art?

The Architecture of Supremacy and Subversion

At the core of this conflict is the fundamental difference in how AI models are trained and run. For years, the industry standard has been the Graphics Processing Unit (GPU), specifically those made by Nvidia.

Nvidia’s platform offers unparalleled versatility. Its CUDA software ecosystem has become the universal language for AI development; virtually any model, from small research projects to massive language models, can run on Nvidia hardware, and run well.

Take the evolution of Nvidia’s flagship chips. The Hopper-based H100 GPU is a marvel of engineering, boasting 80 billion transistors and delivering up to 4 petaFLOPS of peak low-precision (FP8) AI performance. Its successor generation, Blackwell, sold as the B200 GPU and the GB200 superchip, pushes the limits further, dramatically increasing memory capacity and aiming for around 20 petaFLOPS per GPU at even lower FP4 precision, so the two headline figures are not a like-for-like comparison.

Nvidia isn’t just selling chips; it’s selling an entire, established, and continuously evolving ecosystem that researchers trust.

Google’s Tensor Processing Units, however, represent an entirely different approach: domain-specific acceleration. Unlike the general-purpose flexibility of a GPU, the TPU is purpose-built to execute the matrix multiplication operations that are the computational backbone of deep learning models.

This laser focus allows TPUs to achieve high efficiency and speed on the workloads they target: large-scale training and inference, so far mostly within the Google ecosystem.
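To make the matrix-multiplication point concrete, here is a minimal sketch in plain Python. It shows that a dense neural-network layer is just a matrix multiplication, and counts the floating-point operations involved using the common 2·m·k·n convention. The layer shapes, the function names, and the use of the H100's quoted 4 petaFLOPS peak as a divisor are illustrative assumptions, not measurements of any real chip or model.

```python
# Illustrative sketch: shapes and names are hypothetical.

def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as lists of rows."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]

def matmul_flops(m, k, n):
    """Approximate FLOP count: each of the m*n outputs takes k multiplies and k adds."""
    return 2 * m * k * n

# A tiny "dense layer": 2 inputs with 3 features each, projected to 2 outputs.
inputs = [[1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0]]
weights = [[1.0, 0.0],
           [0.0, 1.0],
           [1.0, 1.0]]

outputs = matmul(inputs, weights)
small_flops = matmul_flops(2, 3, 2)

# A hypothetical large layer: 4096 tokens through an 8192 x 8192 weight matrix.
large_flops = matmul_flops(4096, 8192, 8192)

# Theoretical floor on runtime at a quoted peak of 4 petaFLOPS (assumption:
# perfect utilization, which real workloads do not reach).
peak_flops_per_second = 4e15
ideal_seconds = large_flops / peak_flops_per_second

print(outputs, small_flops, large_flops, ideal_seconds)
```

Almost all of the work in the large layer is the same multiply-and-add pattern repeated hundreds of billions of times, which is why hardware built around that one pattern can trade generality for efficiency.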

The current strategic pivot, evident in discussions with giants like Meta about acquiring TPUs, is Google’s aggressive attempt to turn its internal advantage into an industry platform.

The Economics of Custom Silicon

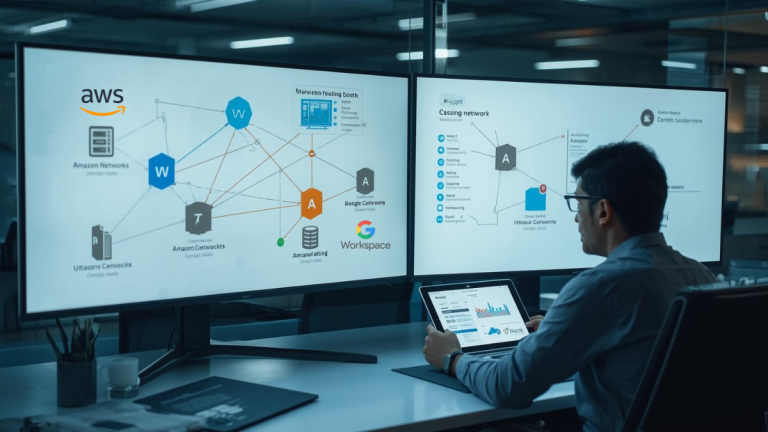

To understand the stakes, we must look at the hyperscalers, the massive companies like Google, Amazon, and Meta that operate the cloud infrastructure everyone else builds upon. These companies spend billions procuring specialized hardware.

When a company uses its own custom silicon, it gains several critical advantages:

- Cost Efficiency: Initial research and development is expensive, but over the long run, removing the markup paid to an external vendor like Nvidia can save vast sums, especially at hyperscaler scale.

- Performance Optimization: A custom chip can be tuned for the company’s specific software stack and operational needs, extracting performance and efficiency that a general-purpose part leaves on the table.

- Supply Chain Control: Relying on a single external supplier creates a bottleneck. Having an in-house option gives the company more control over supply and reduces that dependency.
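The cost-efficiency trade-off in the list above comes down to break-even arithmetic: a one-time R&D bill is repaid by a per-chip saving. The sketch below works through that calculation. Every figure in it is a made-up placeholder chosen for round numbers, not a real price or budget, and the function name is hypothetical.

```python
def break_even_chips(rd_cost, vendor_price, in_house_unit_cost):
    """Return the smallest chip count at which in-house silicon is strictly
    cheaper in total than buying from a vendor, or None if it never is.

    Total in-house cost: rd_cost + n * in_house_unit_cost
    Total vendor cost:   n * vendor_price
    All arguments are integers in the same currency unit.
    """
    saving_per_chip = vendor_price - in_house_unit_cost
    if saving_per_chip <= 0:
        return None  # no per-chip saving, so R&D is never recovered
    return rd_cost // saving_per_chip + 1

# Placeholder numbers (assumptions, not real figures):
rd_cost = 1_000_000_000        # one-time design and tooling cost
vendor_price = 30_000          # price paid per vendor accelerator
in_house_unit_cost = 10_000    # marginal cost per in-house chip

chips_needed = break_even_chips(rd_cost, vendor_price, in_house_unit_cost)
print(chips_needed)
```

Under these placeholder inputs the break-even point is in the tens of thousands of chips, which illustrates why the strategy only makes sense for buyers operating at hyperscaler volumes.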

For years, Google had an internal monopoly on the TPU’s benefits. Now, by offering these chips to major competitors like Meta, Google is essentially saying, “Come build on our hardware, escape the Nvidia platform tax, and we will handle the supply chain problems.”

This is a bold attempt to fracture Nvidia’s software and hardware lock-in.

What’s at Stake and What Comes Next

The current AI landscape thrives on standardization, which Nvidia’s CUDA has long provided as a de facto standard, even though CUDA itself is proprietary. A fragmented hardware landscape, where different AI models perform best on different proprietary chips, could slow innovation by forcing researchers to rewrite or optimize their code for multiple architectures.

However, the competition is ultimately a good thing for the end user. It drives down costs, pushes the boundaries of performance, and forces companies to innovate faster.

If Google is successful, we will see a landscape dominated by a trinity:

- Nvidia: The enduring platform for general-purpose AI development, leveraging its massive community and advanced, universal hardware.

- Google (TPU): A potent contender for specialized, large-scale training tasks, attracting hyperscalers focused on efficiency.

- Other Custom Chips: The rise of other in-house silicon efforts from companies like Amazon (Trainium/Inferentia) and Meta further diversifies the market.

This push by Google isn’t about matching Nvidia chip-for-chip in every category; it’s about establishing the TPU as the most powerful alternative for the most lucrative customers.

Nvidia maintains its lead in absolute performance and ecosystem breadth, but the conversations Google is having are a clear signal that the era of a single, unchallenged AI chip king is coming to an end.

The biggest winners will be the researchers and businesses who benefit from this intensifying arms race for better, faster, and more affordable AI compute power.