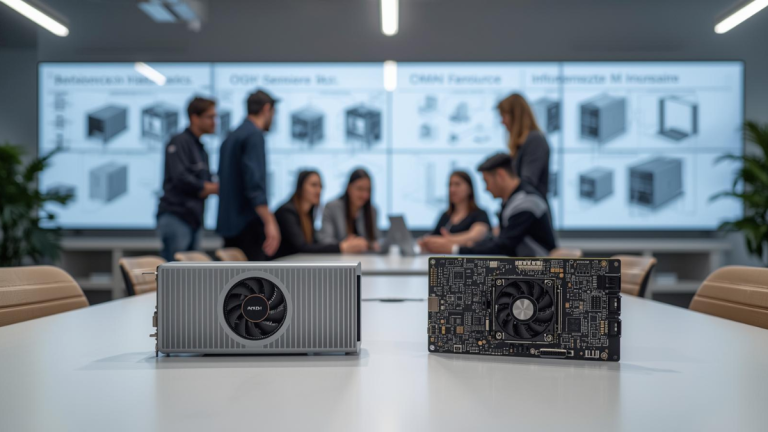

A researcher examines a next-generation neuromorphic chip, one of the recent breakthroughs in neuromorphic computing.

Training a cutting-edge large language model can consume as much electricity as many homes use in a year, and answering millions of queries every day adds a large ongoing cost on top of that. Today’s artificial intelligence, while brilliant, is an energy hog, built on hardware designed for general-purpose computing rather than the massively parallel, event-driven processing of the human brain.

This is where neuromorphic computing breakthroughs enter the conversation, representing one of the most significant architectural shifts in hardware since the GPU. These breakthroughs are not just incremental updates; they are an attempt to reimagine computing from the ground up, drawing inspiration from the biological brain.

The Brain’s Blueprint: What Neuromorphic Computing Is

At its core, neuromorphic computing is about replicating the brain’s fundamental processing units: neurons and synapses. Standard chips follow the von Neumann architecture, which separates processing (the CPU) from memory (RAM) and forces data to travel constantly back and forth between them.

This constant movement consumes a large share of the chip’s power and creates a performance bottleneck, often called the von Neumann bottleneck.

The neuromorphic design discards this separation. It places memory and processing together, much like a biological neuron that processes information where it stores it.

Crucially, these chips run spiking neural networks (SNNs). In a conventional artificial neural network, every neuron computes and passes on a value at every step, whether or not its input has changed; SNNs instead communicate through discrete electrical pulses, or “spikes.”

A neuron transmits a signal only when its accumulated input crosses a threshold. Imagine a light bulb that turns on only when it has a real piece of information to share, staying dark and drawing almost no power when it has nothing to say.
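
This threshold behavior is commonly modeled with a leaky integrate-and-fire (LIF) neuron. The sketch below is a minimal discrete-time version in Python, assuming a leak factor of 0.9, a firing threshold of 1.0, and a reset to zero after each spike; these values and the function name `lif_neuron` are illustrative choices, not parameters of any particular chip.

```python
def lif_neuron(inputs, threshold=1.0, leak=0.9):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    inputs: sequence of input currents, one per time step.
    Returns a list of 0/1 spikes, one per time step.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # The membrane potential leaks toward zero, then integrates new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing, as in the basic LIF model
        else:
            spikes.append(0)  # below threshold: nothing is transmitted
    return spikes


print(lif_neuron([0.0, 0.6, 0.6, 0.0, 0.0, 1.2, 0.0]))  # [0, 0, 1, 0, 0, 1, 0]
print(lif_neuron([0.6, 0.0, 0.0, 0.0, 0.6]))            # [0, 0, 0, 0, 0]
```

In the second call the two 0.6 inputs arrive too far apart: the leak drains the potential between them, so it peaks just below the threshold and the neuron never fires. Timing, not just magnitude, decides whether a spike is sent.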

This event-driven, or asynchronous, processing is a major source of neuromorphic computing’s energy efficiency: when little is happening, little is computed.
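
To make the efficiency argument concrete, the sketch below compares a clock-driven dense update, where every input is multiplied into every output at each step, with an event-driven update that touches only the synapses of neurons that actually spiked. It assumes a hypothetical layer of 1,000 inputs and 100 outputs with roughly 2% of inputs spiking in a given step; the sizes, the sparsity, and the function names are assumptions, and counting synaptic operations is only a rough proxy for energy, not a hardware measurement.

```python
import numpy as np


def dense_step(weights, inputs):
    """Clock-driven update: every input row is multiplied in, spike or not."""
    n_in, n_out = weights.shape
    output = inputs @ weights
    ops = n_in * n_out  # one multiply-accumulate per synapse, every step
    return output, ops


def event_driven_step(weights, spikes):
    """Event-driven update: only rows of neurons that spiked are touched."""
    n_out = weights.shape[1]
    output = np.zeros(n_out)
    ops = 0
    for i in np.flatnonzero(spikes):
        output += weights[i]  # a binary spike simply adds that neuron's weights
        ops += n_out
    return output, ops


rng = np.random.default_rng(0)
weights = rng.normal(size=(1000, 100))
spikes = (rng.random(1000) < 0.02).astype(float)  # assumed ~2% spiking inputs

dense_out, dense_ops = dense_step(weights, spikes)
event_out, event_ops = event_driven_step(weights, spikes)
print(np.allclose(dense_out, event_out), dense_ops, event_ops)
```

Both paths produce the same output, but the event-driven path does work in proportion to the number of spikes rather than the size of the layer. Neuromorphic hardware exploits this with dedicated circuits rather than a Python loop; the loop here only makes the bookkeeping visible.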

Why It Matters Now: The Efficiency Imperative

The contemporary AI landscape is defined by two forces: scale and cost. Models are getting larger, requiring more training data, and demanding increasingly powerful, power-hungry data centers.

The massive energy consumption associated with training and running today’s sophisticated AI is rapidly becoming a barrier to sustainability and accessibility.

This is why neuromorphic computing breakthroughs are a strategic imperative. The goal is to move complex AI out of giant data centers and onto the “Edge” where the data is generated: in drones, cars, wearables, and industrial sensors.

An Edge AI system powered by a neuromorphic chip could perform real-time object recognition or sophisticated natural language processing on a fraction of the power a conventional system needs; for some workloads, reported reductions reach a factor of 1,000 or more, although the gains vary widely by task.

The implication is profound: truly ubiquitous, real-time, sophisticated AI that does not rely on a constant cloud connection or a massive battery. It could democratize AI, moving it from the realm of the hyperscale giants into everyday devices.

What’s at Stake: Beyond Energy Savings

The significance of this architecture extends beyond mere power consumption; it is about enabling new classes of applications.

- Real-Time Processing: Because the chips operate asynchronously, they are well suited to tasks that require real-time adaptation and rapid response, such as autonomous navigation or predictive maintenance in volatile industrial environments. The brain’s own fast reactions illustrate this model’s potential.

- The Sensory Edge: Human perception runs on remarkably little power; the entire brain uses roughly 20 watts. Neuromorphic chips excel at processing sensory data from cameras, microphones, and inertial sensors. For instance, a neuromorphic vision system could focus its processing only on moving objects, ignoring the static background and saving a great deal of power.

- A New Approach to AI: This technology forces developers to rethink how they build AI, shifting from conventional deep learning models to SNNs. The steep learning curve is a current barrier, but it also fosters novel algorithms better suited to sequential data, such as time series or movement patterns.
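
The vision example above mirrors how event cameras (dynamic vision sensors) work: instead of sending full frames, each pixel reports an event only when its log brightness changes by more than a threshold. The sketch below emulates that behavior in software on ordinary frames, assuming a threshold of 0.1 in log-intensity units; the function name `frames_to_events` and its parameters are hypothetical. The same change-only encoding, often called delta modulation, applies equally to one-dimensional time series.

```python
import numpy as np


def frames_to_events(frames, threshold=0.1):
    """Convert a stack of grayscale frames (time, height, width) into sparse events.

    Each event is (t, y, x, polarity), emitted when a pixel's log intensity has
    moved by at least `threshold` since the first frame or that pixel's last event.
    """
    log_frames = np.log(np.asarray(frames, dtype=float) + 1e-3)  # offset avoids log(0)
    reference = log_frames[0].copy()  # per-pixel log level at the last event
    events = []
    for t in range(1, len(log_frames)):
        diff = log_frames[t] - reference
        ys, xs = np.nonzero(np.abs(diff) >= threshold)
        for y, x in zip(ys, xs):
            polarity = 1 if diff[y, x] > 0 else -1  # brighter (+1) or darker (-1)
            events.append((t, int(y), int(x), polarity))
            reference[y, x] = log_frames[t, y, x]  # only changed pixels update
    return events


# A static gray scene in which one bright pixel moves one step to the right.
frames = np.full((3, 4, 4), 0.5)
frames[0, 1, 1] = 1.0
frames[1:, 1, 2] = 1.0
events = frames_to_events(frames)
print(events)  # [(1, 1, 1, -1), (1, 1, 2, 1)]
```

The three 4-by-4 frames hold 48 pixel values, but only the moving bright pixel generates events: one negative event where it left and one positive event where it arrived. The unchanged third frame produces nothing at all.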

Putting sophisticated AI on almost any device also raises ethical questions about who controls it. Deploying this technology requires careful thought about data privacy, security, and potential misuse, especially given how difficult it is to audit the “thought process” of a highly parallel, brain-like chip.

The Road Ahead

Major technology players and academic institutions, such as Intel with its Loihi chips, IBM with TrueNorth, and the University of Manchester with SpiNNaker, are now deep into research and development, refining the materials and fabrication processes for these specialized chips. While large-scale commercialization is still emerging, the current breakthroughs support the underlying premise: biology offers a powerful blueprint for intelligence hardware.

Ultimately, neuromorphic computing is not designed to replace every standard chip; the von Neumann architecture remains unmatched for general-purpose, sequential tasks like database management. Instead, it is poised to become the indispensable coprocessor for the most challenging efficiency and real-time computation problems in AI.

It is a future where the silicon brain works alongside the digital processor, creating a far more powerful and sustainable computing ecosystem. The next great era of AI will be defined not just by smarter software, but by hardware that finally understands the wisdom of the brain’s design.